Oct 20, 2022

Machine Learning as a Service, Part 1: The First Phase in Scaling for Hyper Growth


A Combo Problem

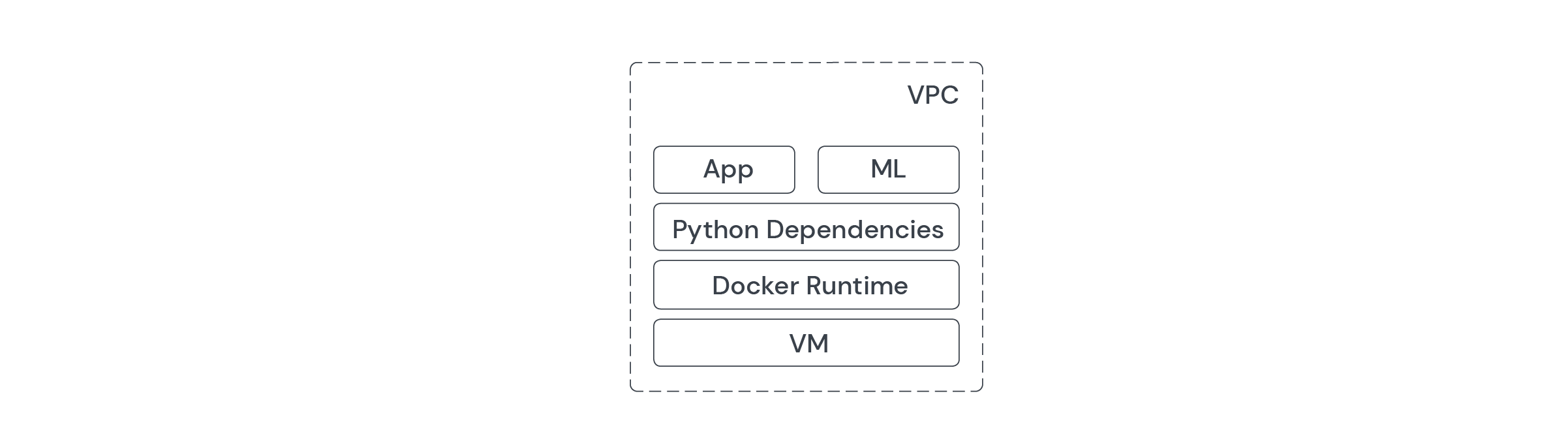

In the early days of SupportLogic, to simplify the architecture and reduce DevOps work, we combined our machine learning (ML) models with the backend code, which I will refer to as the App throughout this article. For each customer, a single Docker image containing both the ML and the App was built and deployed to a production environment. Although this setup kept things simple and helped SupportLogic grow to a Series B company, coupling the ML with the App became increasingly difficult to manage as we entered a hyper-growth phase, for the following reasons:

- The App carries unnecessary, heavy dependencies that only the ML requires: spaCy, SentenceTransformers, HuggingFace models, scikit-learn and more.

- The App and the ML are deployed onto the same VM and compete for resources such as CPU and memory.

- It's impossible to scale the App and the ML independently.

- Onboarding a new customer carries the additional overhead of provisioning the ML.

What is ML as a Service?

To solve the problems of the existing architecture, we analyzed our plans for hyper-growth and decided to pivot to ML as a Service. The core goals of ML as a Service (MLaaS) are to:

- Decouple the ML from the App

- Establish clear RPC interfaces for integration between the ML and the App
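To make the idea of an RPC boundary between the ML and the App concrete, here is a minimal sketch, assuming JSON over HTTP and only the Python standard library. This article does not say which RPC technology SupportLogic uses, so the endpoint path `/v1/signals`, the `SignalRequest`/`SignalResponse` types, `start_ml_service`, `MLClient`, and the keyword rule standing in for a model are all hypothetical.

```python
import json
import threading
from dataclasses import asdict, dataclass
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import Request, urlopen


# Hypothetical contract shared by the App and the ML service.
@dataclass
class SignalRequest:
    text: str


@dataclass
class SignalResponse:
    signal: str
    score: float


# ---- ML side: the only process that would import spaCy, scikit-learn, etc. ----
def detect_signal(req: SignalRequest) -> SignalResponse:
    # Placeholder keyword rule standing in for a real trained model.
    hit = any(w in req.text.lower() for w in ("broken", "angry", "escalate"))
    return SignalResponse(signal="negative" if hit else "neutral",
                          score=0.9 if hit else 0.5)


class MLHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/v1/signals":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        req = SignalRequest(**json.loads(self.rfile.read(length)))
        body = json.dumps(asdict(detect_signal(req))).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args) -> None:
        pass  # silence per-request logging in this demo


def start_ml_service(host: str = "127.0.0.1", port: int = 0) -> HTTPServer:
    # Port 0 asks the OS for a free port; read it back from server.server_port.
    server = HTTPServer((host, port), MLHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


# ---- App side: depends only on the contract and an HTTP client ----
class MLClient:
    def __init__(self, base_url: str) -> None:
        self.base_url = base_url

    def detect_signal(self, text: str) -> SignalResponse:
        payload = json.dumps(asdict(SignalRequest(text))).encode()
        req = Request(f"{self.base_url}/v1/signals", data=payload,
                      headers={"Content-Type": "application/json"},
                      method="POST")
        with urlopen(req, timeout=5) as resp:
            return SignalResponse(**json.loads(resp.read()))
```

In this sketch the App side needs only `MLClient` and the two dataclasses; heavy dependencies such as spaCy or scikit-learn would live exclusively in the service process, which is what lets the two be built, deployed, and scaled separately.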

MLaaS provides the following benefits:

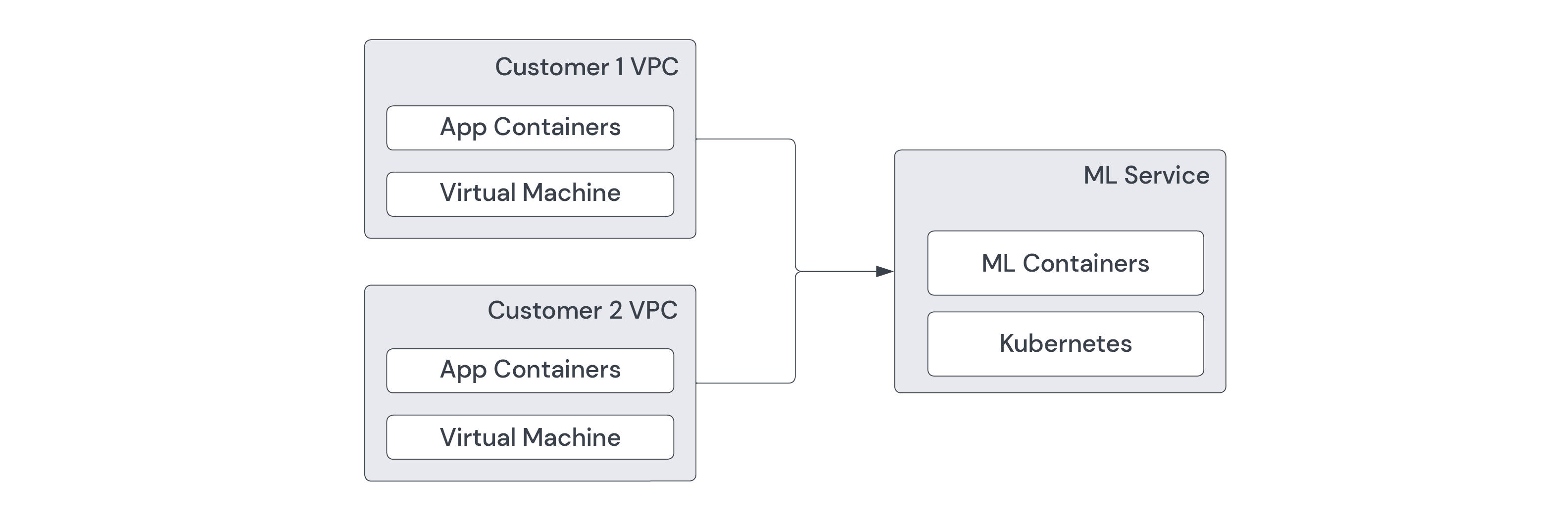

- The ML and the App can have separate build and runtime dependencies. For example, each gets its own Docker image, produced from its own build instructions and deployed to its own production environment.

- Because the ML and the App each run in their own environment, we can scale either one up or down without affecting the other.

- Because ML inference uses the same architecture for every customer, provisioning the ML during customer onboarding takes far less work.

The Vision for MLaaS

Our vision for ML as a Service is to build a platform that:

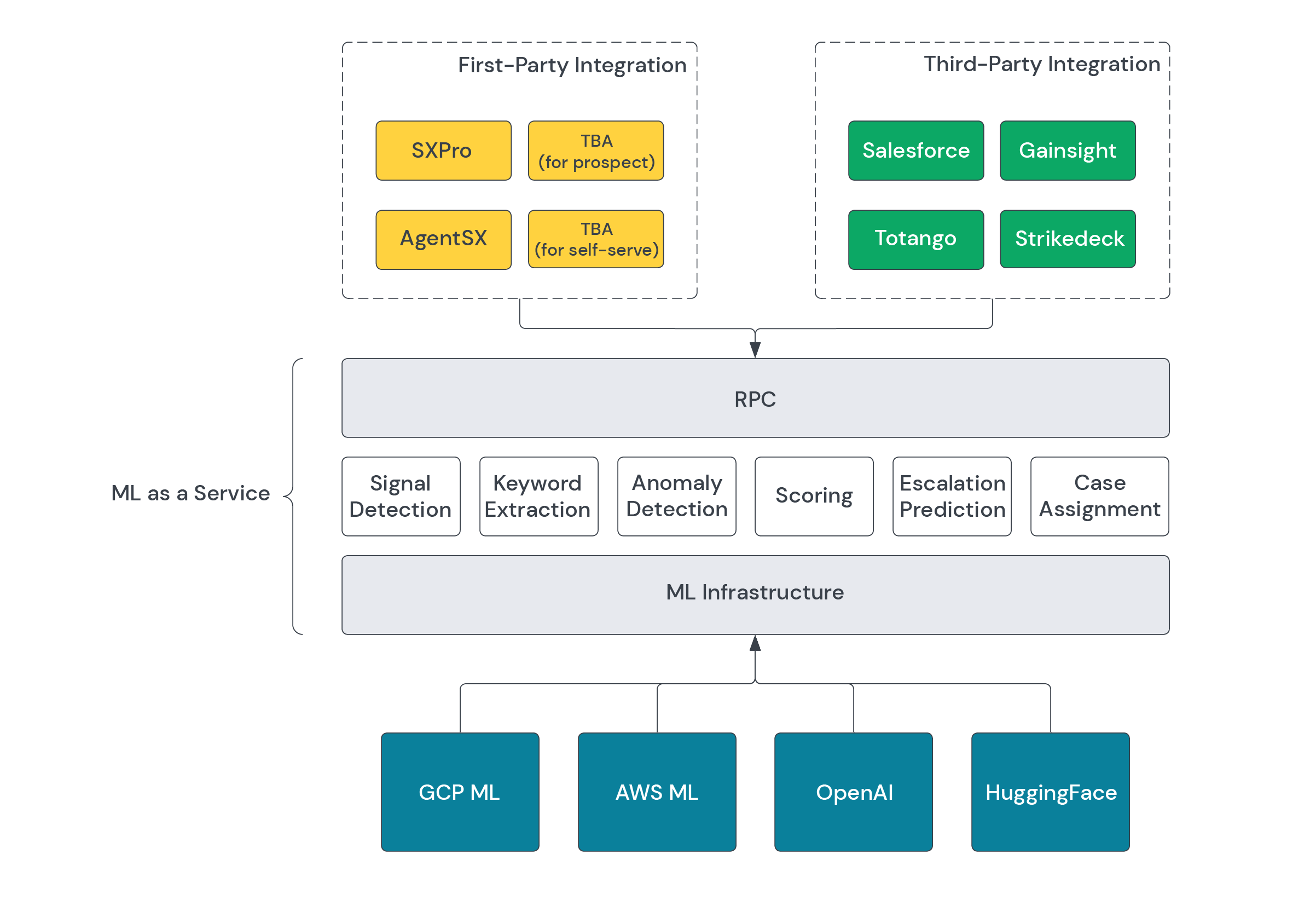

- Includes not only all the cutting-edge ML functionality developed by SupportLogic, such as signal detection, escalation prevention, and intelligent case assignment, but also integrations with ML service providers such as OpenAI, Google Cloud AI, AWS ML, Azure ML, and HuggingFace.

- Exposes all functionality through clear RPC interfaces so that both first- and third-party applications can integrate with the platform.
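One way to picture a platform that serves both in-house models and external providers is a registry of backends behind a single contract. The sketch below is illustrative only, assuming a simple text-in, scores-out contract; `TextClassifier`, `InHouseEscalationModel`, `ThirdPartyAdapter`, and `MLPlatform` are hypothetical names, and no real vendor SDK is called.

```python
from typing import Callable, Dict, Protocol


class TextClassifier(Protocol):
    """Hypothetical contract that every backend must satisfy."""

    def classify(self, text: str) -> Dict[str, float]: ...


class InHouseEscalationModel:
    # Stand-in for a first-party model; a real one would load trained weights.
    def classify(self, text: str) -> Dict[str, float]:
        risk = 0.8 if "escalate" in text.lower() else 0.1
        return {"escalation_risk": risk}


class ThirdPartyAdapter:
    # Wraps an external provider behind the same contract. `call_provider` is a
    # hypothetical callable; in practice it would wrap a vendor's client library.
    def __init__(self, call_provider: Callable[[str], Dict[str, float]]) -> None:
        self._call = call_provider

    def classify(self, text: str) -> Dict[str, float]:
        return self._call(text)


class MLPlatform:
    """Routes requests by backend name; callers never see which vendor answers."""

    def __init__(self) -> None:
        self._backends: Dict[str, TextClassifier] = {}

    def register(self, name: str, backend: TextClassifier) -> None:
        self._backends[name] = backend

    def classify(self, backend: str, text: str) -> Dict[str, float]:
        if backend not in self._backends:
            raise KeyError(f"unknown backend: {backend}")
        return self._backends[backend].classify(text)
```

Putting an RPC endpoint in front of `MLPlatform.classify` would give first- and third-party applications one interface regardless of whether a request is served by a SupportLogic model or an external provider.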

How We Are Building MLaaS

In upcoming blog posts, I'm excited to share how we are building MLaaS, the technology we're using, and the lessons we've learned. Stay tuned!
