Feb 10, 2023

Machine Learning as a Service, Part 3: Deep Dive on Design and Monitoring


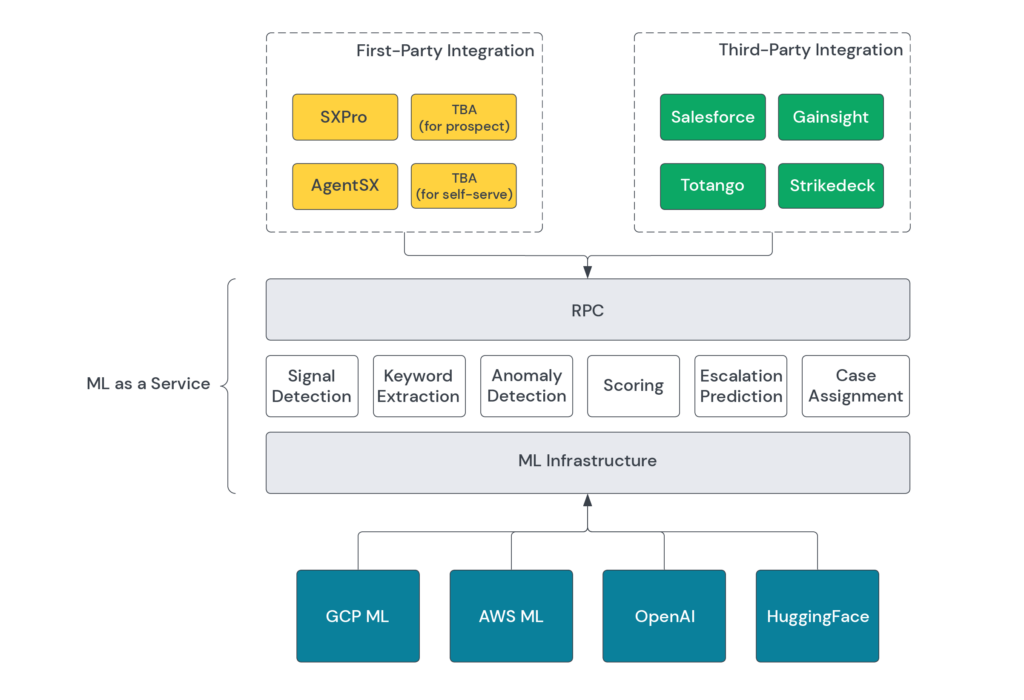

In previous installments of this MLaaS series, we’ve explored design considerations, endpoint management, data integration, and the deployment of API endpoints.

MLaaS has dramatically increased the speed at which we can launch new products. We can now deploy new ML models in 5 minutes, and run sentiment detection on any custom CRM field.

In part 3 of this series we’ll explore model monitoring, which covers alerting, drift detection, and feature attribution. We’ll also dissect the platform used to streamline MLaaS.

Escalation Model and ML Infrastructure

To understand the design choices, let’s start with the ML model and the data we use to train the model. We’ll then address the ML infrastructure needed for our modeling, move to MLaaS, and then to deployment.

ML infrastructure is important because many models are trained for specific customers and have unique experimentation requirements.

Model

The Likely to Escalate (LTE) model is a gradient-boosted tree trained on the temporal data of customer case activity (and more).

Data

The data is mostly a timestamped dataset of customer case activity. We examine various case activities, sentiments, and attention scores at various timestamps of case history to create a snapshot of cases and use it for training. We also actively tinker with the present feature set and add more features every cycle to increase our overall metrics.
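As a rough illustration of what a case snapshot could look like, the sketch below summarizes a case using only the activity recorded up to a chosen timestamp. The `CaseEvent` schema, the sentiment scale, and the three summary features are all hypothetical; the real feature set is much larger and is not described here.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class CaseEvent:
    """One timestamped activity on a support case (hypothetical schema)."""
    case_id: str
    timestamp: datetime
    sentiment: float  # assumed scale: -1.0 (negative) to 1.0 (positive)


def snapshot_features(events, case_id, as_of):
    """Summarize a case using only the events seen up to `as_of`."""
    history = sorted(
        (e for e in events if e.case_id == case_id and e.timestamp <= as_of),
        key=lambda e: e.timestamp,
    )
    if not history:
        return {"event_count": 0, "mean_sentiment": 0.0, "last_sentiment": 0.0}
    return {
        "event_count": len(history),
        "mean_sentiment": sum(e.sentiment for e in history) / len(history),
        "last_sentiment": history[-1].sentiment,
    }


events = [
    CaseEvent("case-1", datetime(2023, 1, 1, 9), 0.5),
    CaseEvent("case-1", datetime(2023, 1, 2, 9), -0.5),
    CaseEvent("case-1", datetime(2023, 1, 3, 9), -1.0),
]
# Snapshot taken before the third event, so only two events are visible.
early = snapshot_features(events, "case-1", datetime(2023, 1, 2, 12))
```

Filtering on `as_of` keeps later activity out of the snapshot, so a training example only contains information that would have been available at that point in the case history.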

Because of the nature of both the data and model, our model and features can decay with time. Thus, features must be monitored and compared with training data to understand skew and drift. As production data distribution starts differing from what the model was trained on, our predictions will also get worse.

MLaaS Design Considerations

This system was designed to serve not just consumer applications but also developers and data scientists. In other words, we wanted it to be easy for consumers to use and equally easy to use for training and productizing the model.

Access to ML Tools

Data scientists need an array of tools for iterative experimentation and must be able to run big data workloads to load data and train the model. For this reason, we scaled model training so that we can experiment with different configurations and compare them, and we built robust data pipelines that can be configured and reused.

Ease of Deployment

We designed the microservice and deployment to be as lightweight as possible while still following the principles of repeatability, rollback, and a proper audit trail. The primary requirement was that a data scientist be able to deploy models rapidly without ops assistance.

Model and Prediction Monitoring

Once a model is deployed, we want to make sure the model is performing at the same standard as in the training and evaluation setting. The following deviations are monitored in the feature set:

- Training-serving skew: The feature data distribution in production deviates from the feature data distribution used to train the model.

- Prediction drift: The feature data distribution in production changes significantly over time.

For all these considerations, we use Vertex AI as the platform for implementing the escalation model API and overall ML infrastructure.

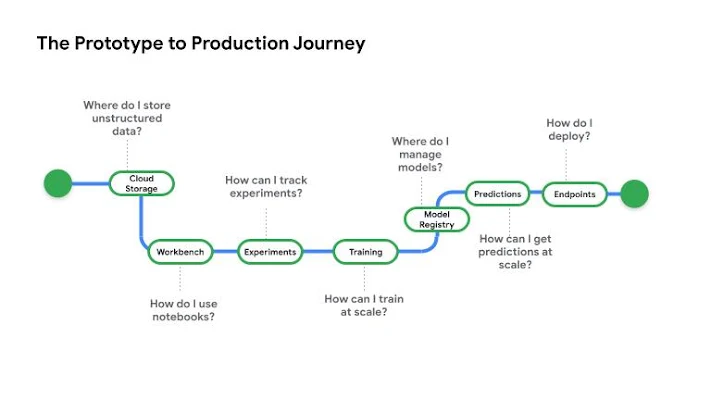

Vertex has been touted by Google Cloud Platform (GCP) as an integrated platform for experimentation, model training, deployment, and monitoring. It includes suites of features beneficial to both data scientists and MLOps.

Vertex AI aims to solve challenges across many stages of a model’s development lifecycle. Streamlining the ops workflow allows us to focus on experimentation.

The following are various components that help ease the path from experimentation to production.

Vertex AI offers various products to streamline various requirements

- Feature Engineering: Vertex feature store offers rich functionality for feature selection and monitoring for drift. Our models use upwards of 65 feature sets with features added every cycle. We pick the best features while monitoring feature quality. Feature store provides a centralized repository for organizing, storing, and serving ML features.

- Training: Vertex training pipelines offer various ways to orchestrate model training in the cloud and facilitate rapid experimentation and evaluation.

- Model management: Used for adding and managing various models and automatic evaluations.

- Model serving (MLaaS): Used for deploying models as an endpoint for consumer use. Vertex deploys the model as an API with relative ease, using Vertex Endpoints without too much setup. We use custom models with a custom Docker image, a one-time setup that works with all of our models. Vertex Endpoints also support the following features:

  - Autoscaling: The endpoint has a minimum of 1 instance with autoscaling.

  - Traffic splitting: One endpoint is capable of running multiple models and can distribute incoming traffic based on traffic settings. This is particularly beneficial for A/B testing among different models.

- Model Monitoring: As the model depends on temporal features, we needed a system to understand data drift and feature attribution. Vertex offers a rich monitoring tool for detecting skew and data drift for every feature. While we mainly use skew between training/validation and production data, Vertex uses different approaches based on feature type:

  - Numerical features: A baseline distribution is calculated for each numerical feature from the training data. Model monitoring divides the range of possible feature values into equal intervals and computes the number or percentage of feature values that fall in each interval. It then calculates the statistical distribution of the latest feature values seen in production. The distribution of the latest feature values in production is compared against the baseline distribution by calculating the Jensen-Shannon divergence.

  - Categorical features: The computed distribution is the number or percentage of instances of each possible value of the feature. The same is then computed for production data, and the two distributions are compared using L-infinity distance as the distance metric.

This is a sample of what feature monitoring alerts look like:
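The two distance metrics described above can be sketched in plain Python. This is an illustration of the math only, not Vertex’s implementation: the bin count, the clamping of out-of-range values, and the base-2 logarithm are assumptions made for the example.

```python
import math
from collections import Counter


def histogram(values, low, high, bins):
    """Fraction of values falling in each of `bins` equal-width intervals."""
    counts = [0] * bins
    width = (high - low) / bins
    for v in values:
        # Clamp so out-of-range production values land in the edge bins.
        index = min(max(int((v - low) / width), 0), bins - 1)
        counts[index] += 1
    return [c / len(values) for c in counts]


def js_divergence(p, q):
    """Jensen-Shannon divergence with base-2 logs, so the result is in [0, 1]."""
    def kl(a, b):
        return sum(x * math.log2(x / y) for x, y in zip(a, b) if x > 0)

    m = [(x + y) / 2 for x, y in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def category_distribution(values):
    """Fraction of instances for each observed category."""
    counts = Counter(values)
    return {k: c / len(values) for k, c in counts.items()}


def l_infinity_distance(p, q):
    """Largest absolute difference in frequency over all categories."""
    keys = set(p) | set(q)
    return max(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


# Numerical feature: compare binned training and production values.
train_hist = histogram([1, 2, 3, 4], low=0, high=5, bins=5)
prod_hist = histogram([3, 4, 4, 4], low=0, high=5, bins=5)
numeric_skew = js_divergence(train_hist, prod_hist)

# Categorical feature: compare per-category frequencies.
train_cats = category_distribution(["a", "a", "b", "b"])
prod_cats = category_distribution(["a", "b", "b", "b"])
categorical_skew = l_infinity_distance(train_cats, prod_cats)
```

A monitoring job would compare scores like `numeric_skew` and `categorical_skew` against a per-feature threshold and raise an alert when the threshold is exceeded.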

Drawbacks and a comparison to other infrastructure

Let’s explore some shortcomings and comparisons to other infrastructure and discuss why we are using Vertex:

Vertex Endpoints can’t be scaled down to zero instances. Unlike Cloud Run, they always keep at least one instance running and therefore always incur some cost. We chose Vertex regardless because LTE model prediction works much like a monitoring service: we periodically run the model for every open case, so our API usage is deterministic and arrives at a regular cadence.

Escalation Model API

The escalation prediction model API uses a custom model served from our own Docker container. As mentioned above, we use a custom Docker image. The requirements for building a custom Docker image for Vertex are the following:

- PredictionRoute endpoint: The model prediction endpoint is defined to accept the request and output class probabilities.

- HealthRoute endpoint: Vertex uses this endpoint to monitor endpoint health, pinging it periodically to get health stats for the container. We use a simple endpoint that checks whether the server is still running.

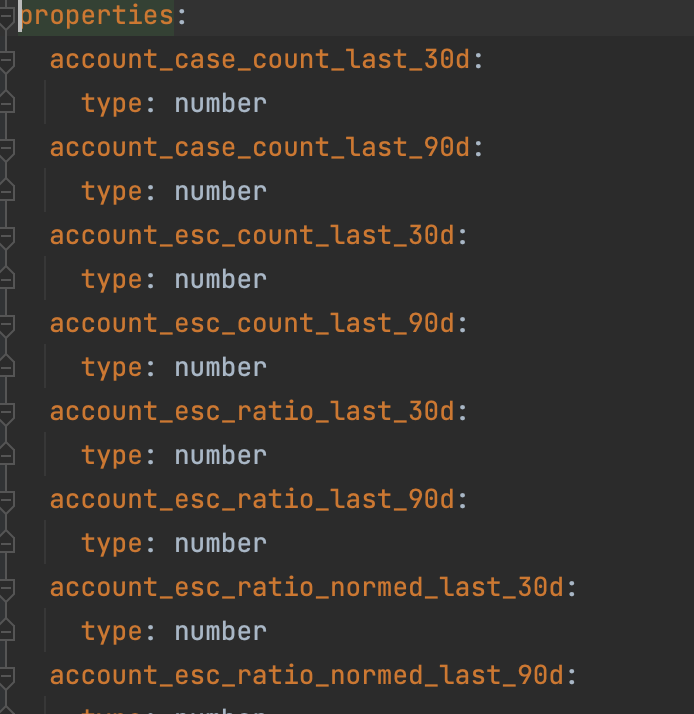

- Prediction schema: The request schema describes the shape of the features in a request to the model. Model monitoring also uses it to parse feature values and apply statistical models. The escalation model declares the features it uses in input_schema.yaml.
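The prediction and health routes listed above can be sketched with Python’s standard library. This is a minimal illustration, not the service we run: `predict_proba` and the `negative_sentiment_ratio` feature are hypothetical stand-ins for the real model and schema. The `AIP_*` environment variables and the `instances`/`predictions` JSON shape follow Vertex’s custom container conventions, and the fallback values are placeholders for local runs.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer

# Vertex sets these variables inside custom containers.
HEALTH_ROUTE = os.environ.get("AIP_HEALTH_ROUTE", "/health")
PREDICT_ROUTE = os.environ.get("AIP_PREDICT_ROUTE", "/predict")
PORT = int(os.environ.get("AIP_HTTP_PORT", "8080"))


def predict_proba(instance):
    """Hypothetical stand-in for the trained model: returns class probabilities."""
    score = float(instance.get("negative_sentiment_ratio", 0.0))
    score = min(max(score, 0.0), 1.0)
    return [1.0 - score, score]


def handle_predict(body):
    """Map a Vertex-style request body to a Vertex-style response body."""
    instances = json.loads(body)["instances"]
    return {"predictions": [predict_proba(i) for i in instances]}


class Handler(BaseHTTPRequestHandler):
    def _send_json(self, status, payload):
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self):
        # Health check: answers 200 as long as the server process is running.
        if self.path == HEALTH_ROUTE:
            self._send_json(200, {"status": "ok"})
        else:
            self._send_json(404, {"error": "not found"})

    def do_POST(self):
        if self.path != PREDICT_ROUTE:
            self._send_json(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        self._send_json(200, handle_predict(self.rfile.read(length)))


def serve():
    """Start the server; blocks until the process is stopped."""
    HTTPServer(("0.0.0.0", PORT), Handler).serve_forever()
```

Calling `serve()` as the container entrypoint starts the server. Keeping `handle_predict` separate from the HTTP handler makes the request-to-response mapping easy to unit test without opening a socket.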

Ease of Deployment

We wanted deployment to be lightweight while maintaining the Ops principles of repeatability, rollbacks, and proper audit.

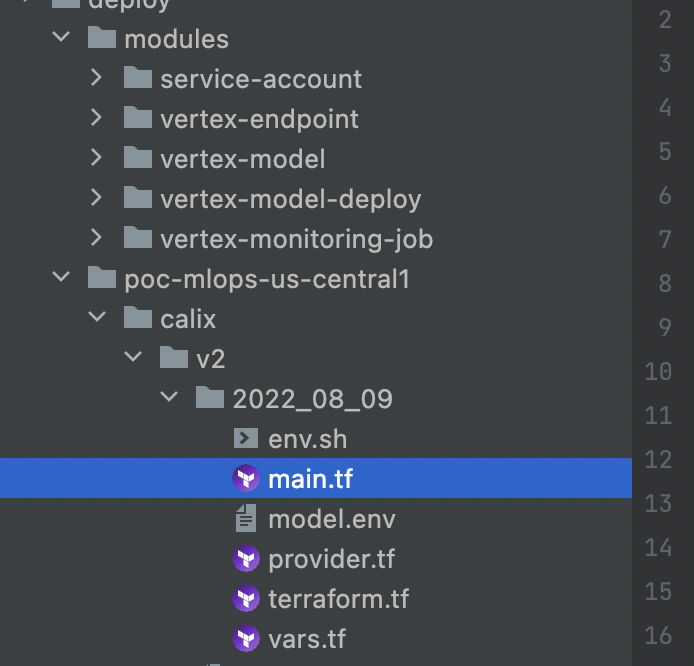

For deployment, we use Terraform, which supports the principles outlined above and adds the benefits of modularity and reusability. Once a Terraform module is created, it can be reused across different projects.

Terraform module build

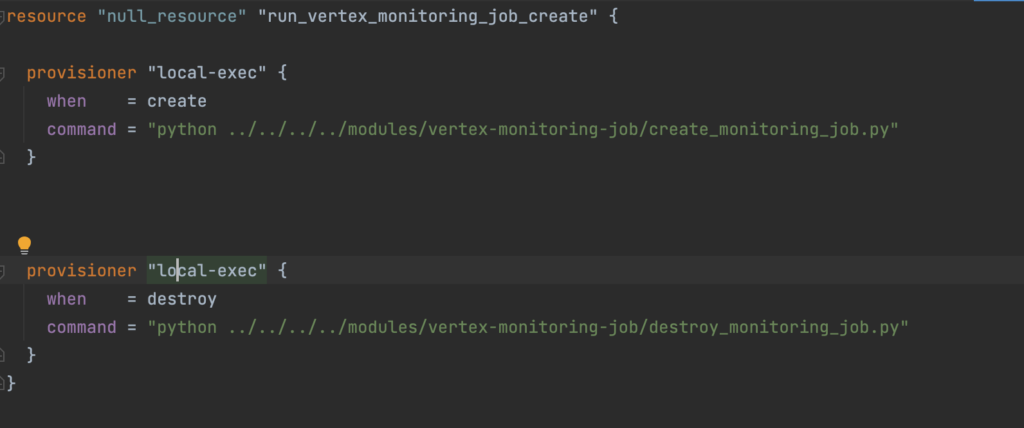

Terraform doesn’t have great support for Vertex, so a few modules are missing from the Terraform registry. We compensated for those gaps by using Terraform provisioners that wrap the GCP AI Python SDK and run during terraform apply and destroy.

Provisioners are a way to call user scripts during terraform apply and destroy.

- Vertex Model: Deploys the custom image of the escalation model as a Vertex Model artifact. Provisioner wrapping google.cloud.aiplatform.Model.create to create a new vertex model.

- Vertex Endpoint: Deploys a new/updated endpoint. https://registry.terraform.io/providers/hashicorp/google/latest/docs/resources/vertex_ai_endpoint

- Vertex Deploy Model: Deploys the created model to the created Endpoint. Provisioner wrapping google.cloud.aiplatform.Model.deploy to deploy the model to the endpoint.

- Vertex monitoring job: Deploys a monitoring job on the endpoint using model feature schema. Provisioner wrapping aiplatform.ModelDeploymentMonitoringJob.create to create a new monitoring job.

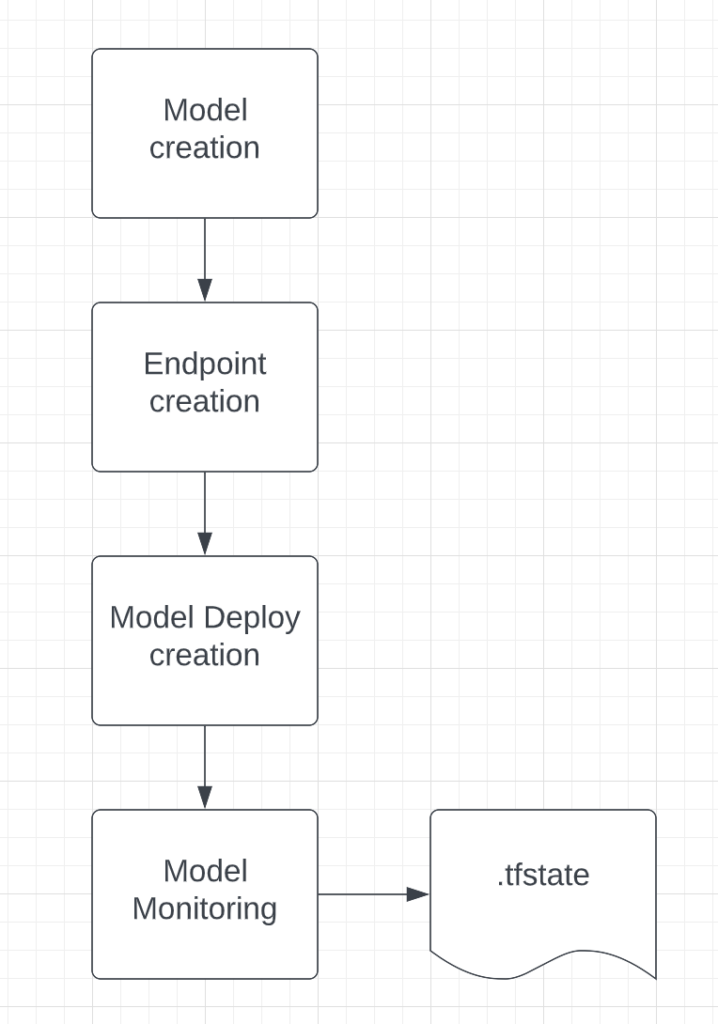

Terraform apply works in stages: it first creates the Model and Endpoint, then deploys the model to the endpoint, and finally enables the monitoring job on the endpoint.

Terraform destroy works in the reverse order: it first deletes the monitoring job, then un-deploys the model from the endpoint, and finally deletes both the model and the endpoint.
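The ordering described above can be modeled in a few lines of Python. This sketch only illustrates the staging logic: the resource names are illustrative labels and no Terraform or GCP calls are made.

```python
# Stages mirror the order described above; names are illustrative labels.
STAGES = [
    ["vertex_model", "vertex_endpoint"],  # created first, independent of each other
    ["vertex_deploy_model"],              # needs both the model and the endpoint
    ["vertex_monitoring_job"],            # needs a deployed model on the endpoint
]


def plan(stages, action):
    """Return the ordered list of (action, resource) steps for apply or destroy."""
    if action not in ("apply", "destroy"):
        raise ValueError(f"unknown action: {action}")
    # Destroy walks the stages in reverse so dependents are removed first.
    ordered = stages if action == "apply" else reversed(stages)
    return [(action, resource) for stage in ordered for resource in stage]


apply_steps = plan(STAGES, "apply")
destroy_steps = plan(STAGES, "destroy")
```

Reversing the stages on destroy ensures that nothing is deleted while another resource still depends on it, which is why the monitoring job is removed before the model is un-deployed.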

Lessons Learned

MLaaS infrastructure not only helps us scale up and cross-pollinate different products but also makes experimentation easy, thus increasing the output of innovation and research.

Modern ML platforms are getting more streamlined, but not without constraints. Here is a summary of some of the lessons we’ve learned:

- Vertex is constrained by its scaling options, especially since we can’t scale to zero instances. Using it therefore requires some oversight of Endpoints and making sure we destroy Endpoints that are not being used. Another constraint is that request routes are hardcoded in Vertex. Other than predict and explain, you can’t define any custom endpoint route.

- There isn’t a single product that will fulfill every requirement, so it’s better to have multiple ML infrastructure suites for different purposes.

- Terraform, although really powerful, is still not mature enough to be used end to end for Vertex AI and other GCP products, because some modules are not fully supported in the Terraform GCP registry.

Next Steps

We are planning a suite of ML features intended to shorten the time from experimentation to production, including offloading training to a distributed training pipeline for the following use-cases:

- Model training for new models involving hyperparameter tuning and feature selection. Also for running big datasets with parallelization and distributed training.

- Model refresh for already existing models based on data drift and model alerts. While we do deploy alerts and model monitoring, we want to eventually trigger model training automatically.

- Automated training for new customers and an automatic evaluation pipeline:

  - Match customers with the existing models using automated evaluations.

  - Auto-generate new models for new customers during the onboarding process.

This concludes part 3 of our MLaaS series – stay tuned for part 4 and be sure to revisit the previous installments.
